AI Proliferation and Organizational Design

automation, RPA, and distribution

I’m going to be spending plenty of time in the next couple months chatting about the product innovations, but first I thought it might be useful to set out some of my hunches on where AI is going.

Is the future General Purpose or Special Purpose LLMs?

Sam Altman had an important interview a couple weeks back where he mentioned that we are going to be using GPT-4 for quite a bit and further innovation is going to be slower.

You can probably take different stances on what he’s conveying: GPU and training costs need to come down, don’t bother trying to compete with us, or that transformers can only take us so far. Most likely, we are just hitting the realities of the S-curve.

And this raises an issue for thinking through AI in the enterprise. The biggest questions for enterprise value these days are mostly around a) reliability and b) data privacy. Both appear to be partly solved not simply by better GPTs, but by special purpose LLMs that can address reliability and, often, data privacy.

We know how to train models - the secrets of transformers have been revealed. And even finetuning general LLMs can unlock the reliability required in select use cases without necessitating billions of dollars of training costs for a better general model. So for most companies, the question is going to revolve around ROI. Do you wait for better GPTs? Or do you onboard myriad vertical LLMs and finetuned models? It’s clear to me that the ROI with vertical LLMs is sufficient to start onboarding.

In other words, I think it’s relatively safe to imagine vertical AIs proliferating and the innovation cycle quickly marching towards LLMs for vertically specific use cases.1

These LLMs will impact work-product and at least in the near term will find their utility in the hands of specialized labor acting as a productivity booster.

Fundamentally, we may be reinforcing Adam Smith’s market theories: specialization, even at the level of compute, matters.

So assuming this pragmatism is correct, we end up with a completely different set of deployment issues to think about. If GPTs alone were sufficient, we could imagine implementation looking more like cloud migration. Painful, but once it’s done, it’s mostly done. Instead, hyper-specialized AI deployment looks far more like software deployment, replete with integration challenges across myriad software products and myriad AIs, and with plenty of data security and AI reliability concerns across the landscape.

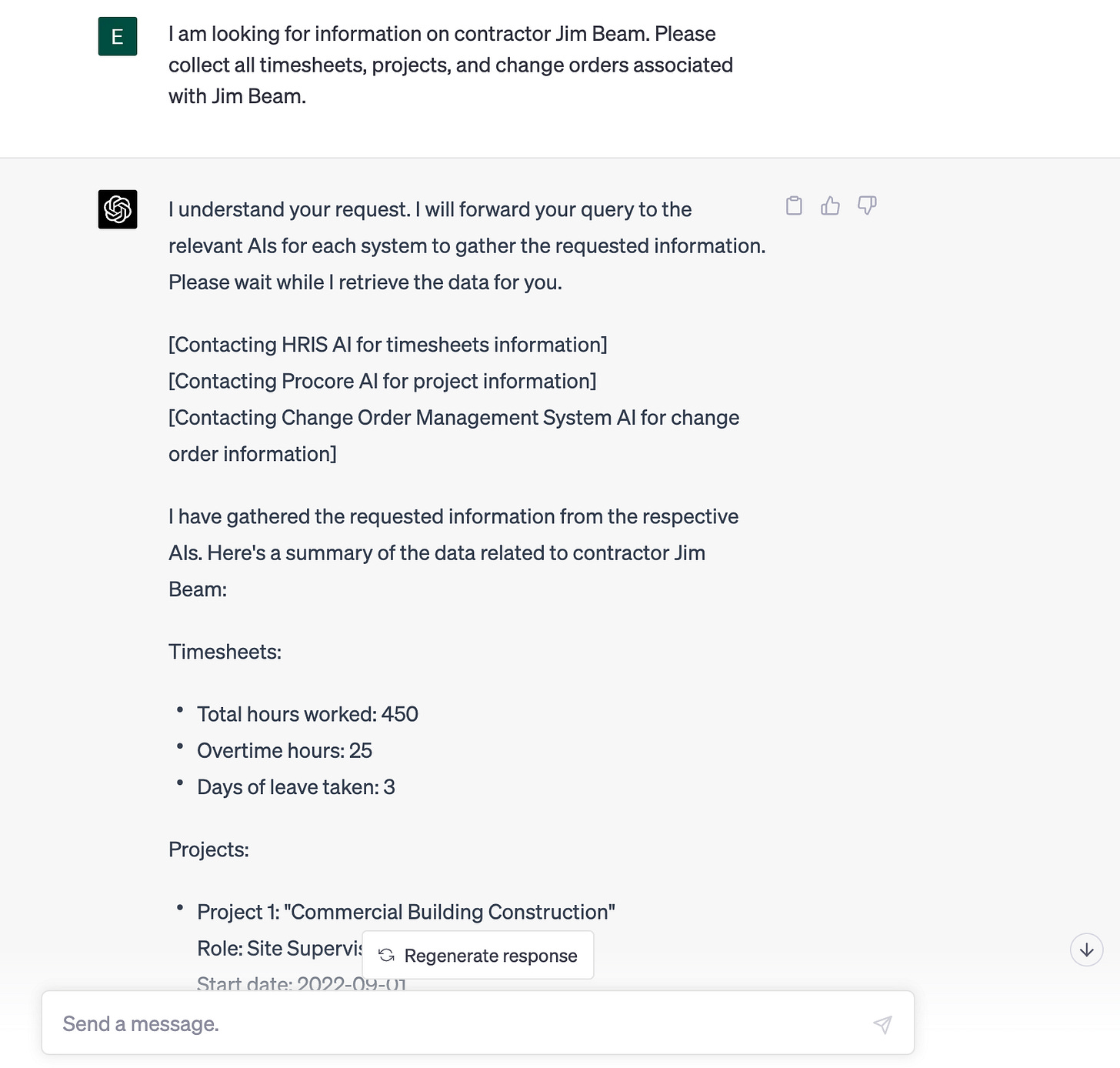

It’s plausible that each existing software product becomes infused with AI, new AIs get deployed on top and across products, and we end up with organizations solving the reliability problems by having general AIs interface with specialized AIs - like this example below.

This is a somewhat trite example that could potentially be solved by “GPT plugins.” But the more complex, nuanced, private, and unstructured the data or work-product, the more industries and enterprises I’d imagine will favor this approach.
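To make the general-plus-specialized pattern a bit more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `SpecialistModel` type, the keyword-based `route` function, and the stand-in model functions only illustrate the shape of the idea and are not any vendor's API. In practice the routing step would probably be a general LLM itself rather than a keyword match.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SpecialistModel:
    """Hypothetical wrapper around a vertical (special purpose) model."""
    name: str
    keywords: List[str]            # crude stand-in for a real routing signal
    answer: Callable[[str], str]   # stand-in for a call to the model


def general_model(prompt: str) -> str:
    # Stand-in for a general purpose LLM; used as the fallback.
    return f"[general] {prompt}"


def route(prompt: str, specialists: List[SpecialistModel]) -> str:
    """Send the prompt to the first specialist whose keywords match,
    falling back to the general model otherwise."""
    lowered = prompt.lower()
    for specialist in specialists:
        if any(keyword in lowered for keyword in specialist.keywords):
            return specialist.answer(prompt)
    return general_model(prompt)


specialists = [
    SpecialistModel("legal", ["contract", "clause"], lambda p: f"[legal] {p}"),
    SpecialistModel("finance", ["invoice", "ledger"], lambda p: f"[finance] {p}"),
]

print(route("Summarize this contract", specialists))
print(route("What is the weather?", specialists))
```

The first prompt lands with the hypothetical legal specialist and the second falls through to the general model. The interesting design question is who owns the routing logic, which is an integration problem as much as a model problem.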

Adoption Challenges

My hunch is that many of the problems that we have thus far encountered in cloud and software adoption will also need to be solved for businesses of all types, but with a larger scope. If most companies became tech adopters in the past 20 years, soon every company will move to become tech-enabled.

But while the products get better, the central question will quickly become one of organizational design: how should you go about managing a tech-enabled company?

And ironically, the places where AI could have the most impact are often the places where digital stewardship is not great and management isn’t necessarily used to interfacing with software at all. The software might be extremely legacy, the organization might be run in Excel and Word, and there’s never been a reason to think through securing organizational data - it doesn’t ever leave.

That means in many industries, the existing software and IT strategy will also have to be replaced in order to benefit from cloud-deployed AI models and new software. In other words, the challenge for vendors is quickly going to become a distributional one. Soon businesses will be drowning in AI-generated sales messages for AI products, all while trying to form a cohesive business strategy around the brave new world and determine which models to adopt and which processes to automate.

Most organizations are going to require not only fundamental change in their business practices, but also some level of re-architecting their entire digital asset landscape to take advantage.

RPA and Automation

It’s useful to consider some of the challenges RPA has encountered in organizational penetration. RPA and AI are somewhat dissimilar, and while I don’t think RPA is entirely the right framework, there are some important similarities.

RPA, in my mind, is perhaps the best example of a technology whose disruption was far more concentrated than one might have anticipated from its original hype.

Hyper-automation sounds awesome. Software robots sound incredible. But while there have definitely been cost savings associated with RPA uptake, they have mostly been concentrated in enterprise companies, where the existing software is so bad that any automation can make a difference. And in the mid-market, up until recently there’s been very little impact.2

RPA’s premise revolves around using software scripts to automate business systems. That’s really powerful, yet it has often found its fit in hyper-legacy business systems. It’s patchwork on outdated digital + organizational design.

The bear case for AI might be something similar: organizational design problems are not software problems. Organizations that could most benefit from AI might need to spend several years performing migrations over to new and better systems in order to experience the productivity gains from AI.3 In the absence of these systems changes, simply having AI might add some cost-savings benefit, but it might not fundamentally rewire productivity: the art of doing more with less.

AI in most cases is not infrastructure, it’s reliant upon it. It needs transposition layers to render useful actions - a layer that transposes AI generation into useful business value. If the transposition layer is a human, that’s fine, but it won’t deliver as much productivity impact as when the transposition layer is software.
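As a minimal sketch of what a software transposition layer might look like, assume the model can be asked to emit a small JSON instruction. The action name, the `create_ticket` handler, and the JSON shape below are all hypothetical. The point is only that validated model output becomes a system action without a human retyping it, and anything that fails validation is handed to a person.

```python
import json
from typing import Callable, Dict


def create_ticket(args: dict) -> str:
    # Hypothetical stand-in for a call into a ticketing system.
    return f"ticket created: {args['title']}"


# Allow-list of actions the layer is willing to perform.
HANDLERS: Dict[str, Callable[[dict], str]] = {"create_ticket": create_ticket}


def transpose(model_output: str) -> str:
    """Turn raw model text into a business action, or hand off to a human."""
    try:
        instruction = json.loads(model_output)
        handler = HANDLERS[instruction["action"]]
        return handler(instruction["args"])
    except (json.JSONDecodeError, KeyError, TypeError):
        # Anything malformed or not allow-listed goes to a person.
        return "needs human review"


print(transpose('{"action": "create_ticket", "args": {"title": "Reset VPN access"}}'))
print(transpose("Sure! I would open a ticket for that."))
```

The fallback branch is the human transposition layer. The more often output can take the first path, the more of the productivity gain shows up.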

For AI to be truly powerful, it will rely upon coordinating as much data as possible, inter-stitching different AIs with different context, and streamlining the number of actions human agents must take in order to produce value.

To the extent most organizations are not well integrated across systems, reliant upon humans to stitch together organizational context and pass work-product across systems and people, we might end up with something powerful, but still not the productivity dream.

In short, with a proliferation of models will come new mandates to rethink organizational design, rethink systems architecture, and develop new strategies around integration pathways for tech/AI-enabled organizations to flourish.

The context window and the increased need for iPaaS and integration strategies

Peter Schroeder had a fantastic piece the other week in his brilliant newsletter, The API Economy. The basic point? AIs and APIs will go hand in hand.

If you look at GPT-4, its biggest unlock was probably not the model size; it was the context window. GPT-3.5, the model behind the original ChatGPT, could process 4,096 tokens (about 3,000 words). GPT-4 can process up to 32,768 tokens (about 25,000 words).
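As a rough sanity check on token-to-word conversions, here is a small sketch. It assumes the commonly cited rule of thumb of about 0.75 English words per token, which is an approximation that varies with the text and the tokenizer; `approx_words` is a helper made up for this illustration.

```python
WORDS_PER_TOKEN = 0.75  # assumption: rough rule of thumb for English text


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a given token budget."""
    return round(tokens * words_per_token)


for window in (4_096, 32_768):
    print(f"{window:>6} tokens ~ {approx_words(window):,} words")
```

Under that assumption, a 4,096-token window holds roughly 3,000 words and a 32,768-token window roughly 25,000, so the window grew eightfold either way.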

The more context I give an LLM, the better and more accurate the output. Conversely, the less context I feed the LLM, the more the model tends to predict something false: a hallucination.

In enterprises, the key question will be around how to give AIs the proper context.4 My theoretical general AI with vertical AI sub-routines is one path, but it presupposes robust integration. Said differently, if you need RPA to band-aid over integration issues, you might have a problem feeding LLMs context.
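One way to picture the problem of giving AIs the proper context is as a packing exercise: snippets pulled from different systems compete for a fixed token budget. The sketch below is hypothetical throughout. The `Snippet` type, the relevance scores, and the word-count-based token estimate are assumptions for illustration; a real system would use the model's own tokenizer and some retrieval step to score relevance.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Snippet:
    source: str       # hypothetical system of record, e.g. "crm"
    text: str
    relevance: float  # assumed to come from some upstream retrieval step


def estimate_tokens(text: str) -> int:
    # Crude assumption: ~0.75 words per token, so tokens ~ words / 0.75.
    return max(1, round(len(text.split()) / 0.75))


def build_context(snippets: List[Snippet], token_budget: int) -> str:
    """Greedily keep the most relevant snippets that still fit the budget."""
    chosen = []
    used = 0
    for snippet in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = estimate_tokens(snippet.text)
        if used + cost <= token_budget:
            chosen.append(f"[{snippet.source}] {snippet.text}")
            used += cost
    return "\n".join(chosen)


snippets = [
    Snippet("crm", "Acme renewed its contract in March", 0.9),
    Snippet("erp", "Open invoice 1042 is thirty days overdue", 0.7),
    Snippet("wiki", "Office plants are watered on Fridays", 0.1),
]
print(build_context(snippets, token_budget=20))
```

With a budget of 20 estimated tokens, the two most relevant snippets fit and the low-relevance one is dropped. Note that the packing only works if the snippets can be fetched from the CRM, ERP, and wiki in the first place, which is the integration dependency.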

In my estimation, this means that iPaaS platforms are some of the best positioned to help complex organizations with AI approaches - they’ve solved one of the tricky dilemmas. The data and paths are normalized, and this could yield AIs that sit at the iPaaS level capable of executing across complex systems.5 This also means that I think iPaaS systems will quickly become one of the most valuable products in this next tech super-cycle. Since AI utility will depend upon robust integration in some form or fashion, iPaaS and AI procurement will go hand in hand.

Lastly, I think this all means that consulting and IT services will enter another critical era. Many organizations where AI could be incredibly powerful do not have the CIO or IT resources to do this alone. And software vendors might not be positioned to effectively help organizations do this sort of design across the org and across myriad software and AI initiatives.

We are going to enter a new super-cycle hyper-focused around vertical AI, vertical software, and a mixture of data and horizontal products that can knit together other business processes. But no software or AI stakeholder might alone be capable of solving organizational design challenges that lead to massive productivity increases. Designing GTM strategies with consulting partners will be of the utmost importance to realize the full productivity that this super-cycle could provide.

Since Twitter and Substack are engaged in the world’s most pointless standoff, an image of the relevant tweet and a link to the thread will have to suffice.

For similar IT strategy and resourcing constraints, the distribution model has migrated towards managed services in conjunction with consulting partners.

In other words, it’s still very much the software era, just with a different end mandate.

The context window and an AI having organizational context are somewhat different but I am happy to blend them together here. I think they will quickly become one and the same problem.

Unified APIs vs. iPaaS could have very different strengths here. You could imagine secured iPaaS layers that sit at the company level could yield private AI models that operate across an entire company’s context.