Compute Markets could get really weird

I reserve the right to be wrong about everything below. This is an AI-free essay about AI (except for the occasional citation and the TL;DR section).

TL;DR

Global GPU capex grew from $28 billion in 2023 to roughly $110 billion in 2024 with demand still outpacing supply and trillions in investment expected by the 2030s.1

Buyers treat GPU hours as fungible, leading to commodification.

Take-or-pay, forward-commitment-style contracts dominate today, but they crowd out startups and make build-outs lumpy. Spot markets are inevitable and already appearing.

Financialization will ensue, with potential ramifications for further commodity markets around the model layer.

As a result, it’s theoretically plausible that application layer companies end up functioning as pseudo-trading desks for their customers.

In essence, the Bitter Lesson is: “don’t bet against scaling laws, more compute is all you need.” It turns out that nearly every problem is distillable to leveraging generalizable compute against it.

In the past 5 years, this has led to an inexhaustible demand for compute, in the form of GPUs, that is now being financed to the tune of well over $100 billion and climbing via datacenters.

This inexhaustible compute need and the competition amongst data centers compels the demand side to try to treat compute as fungible. Since no one provider can serve the theoretical demand of all customers or potentially even one foundation model company’s demand, customers need paths to leverage compute wherever they can find it, with minimal differentiation in the experience.

Datacenters have nearly no choice but to embrace this desire given the sheer amount of usage they need to drive inside of their centers. As Evan Conrad puts it, there are certainly distinguishable attributes within compute clusters; compute is not a pure commodity yet, and SKU, locality, and compliance differences still matter, but distinguishable attributes don’t lead to companies prioritizing a single datacenter provider while paying a premium for value-add on top of compute. If compute is all you need, the correct strategy is nearly always to spend incremental dollars on raw compute vs. ancillary services from a particular data center provider.

Oil and gas financial markets are designed to smooth out the inherent volatility of a market fraught with peaks and valleys, interday spikes, weather, and a whole litany of other problems.

Further, when you have inherently spiky and volatile commodity markets, it becomes destructive to equity value to finance this risk on your balance sheet. Financial markets exist to transfer that risk to parties better equipped to hold it.

One possible theory you could have about compute markets is that due to novel AI business models, compute is starting to look less like a predictable and linear market where demand can be easily forecast and planned for (and treated with a simple SaaS equation) and much more like a market with inherent peaks and valleys.

The end result is obvious: compute will be commodified. I don’t simply want to repeat what Evan says with respect to this commodification, but we do need a working understanding of what’s leading to it before getting into the wonkier ramifications.

Commodification in a nutshell:

The demand-side for compute is incentivized to procure compute as close to the cost of running a cluster as possible. This is a function of how much compute is demanded. There is simply not much value-add that can be had from software on top of GPU clusters vs. simply buying more GPU clusters with the same spend.

This naturally pushes the margins that can be had on providing a GPU cluster down aggressively to the pure cost of running GPUs. Margins mirror commodities.

As a result, strategies around running commodity resources tend to triumph.

First, this means that commodity resources prioritize selling forward-commitments to their customers. This locks in demand guarantees for 3-5 year terms, guaranteeing margins and revenue for datacenters that must produce billions in capex on depreciating hardware to mount a viable business.

Second, this initially means that commodity markets are wildly inefficient. Because forward commitments dictate whom commodity providers will do business with, the demand side must initially come with tens if not hundreds of millions in forward commitments revenue to be guaranteed any allotment of the commodity.
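To make the scale concrete, here is a back-of-the-envelope sketch of what a take-or-pay commitment adds up to. The GPU counts, the $2.00 per GPU-hour rate, and the 3-year term are illustrative assumptions, not figures from any actual contract.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def commitment_value(gpus: int, price_per_gpu_hour: float, years: float) -> float:
    """Total take-or-pay obligation for reserving `gpus` around the clock."""
    return gpus * price_per_gpu_hour * HOURS_PER_YEAR * years


# Hypothetical inputs, chosen only to show the order of magnitude.
small = commitment_value(gpus=1_000, price_per_gpu_hour=2.00, years=3)
large = commitment_value(gpus=10_000, price_per_gpu_hour=2.00, years=3)

print(f"1,000 GPUs for 3 years:  ${small:,.0f}")
print(f"10,000 GPUs for 3 years: ${large:,.0f}")
```

Under these assumptions, even the small reservation lands in the tens of millions of dollars, and the large one in the hundreds of millions.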

We should stop here and simply say: this is terrible for everyone on every side of the market.

If we play the ramifications of “pure forward commitments” out, a couple things become very clear:

In AI, datacenters are purely a function of their forward commitments revenue. Very bad for business flexibility. This makes datacenter buildouts look lumpy. Incremental demand can only be captured if you build out another 10,000 GPUs, as opposed to another 100.

The demand side must show up with tens of millions, if not hundreds of millions, of dollars to spend. This is incredibly equity inefficient. In the case of a foundation model company, this makes sense: they have the billions in revenue to justify forward commitments. But it essentially freezes compute-hungry startups out of the market or forces their investors to inject equity into companies at hefty valuations in order to purchase forward commitments.2 This is bad for investors, companies, innovation, and their own customers.

This one is more debatable, but let’s entertain it for now: End customer relationships are wildly inefficient around price discovery for their compute. You could certainly take the stance that this is a good thing for the application layer (or even if it’s bad, that it will continue), however, I’ve got some questions.

First, this equilibrium cannot last if model capabilities continue to converge and themselves look to commoditize. I’ve potentially got two layers of commodification now: GPUs and models.3 This should be a boon to the application layer, but likewise will fend off huge gross margin gains on tokens. There is potentially more value add here, it just probably isn’t directly in token spend.

B2B companies are already increasingly sensitive to compute spend. As compute spend ramps up, why would we expect them to be apathetic to price discovery for the raw cost of their compute? This is fundamentally why I don’t expect growth marketing tactics to work in the AI era for most B2B work around token spend. Cf. Cursor running negative gross margins. The minute you quit subsidizing tokens for B2B audiences, I think you risk them switching away from you altogether.4

Apres Moi, Le Deluge

In every single commodity, these dynamics prove untenable. And so first, spot markets form; second, financial instruments form on top to reduce pricing and duration risk for all market participants.

Spot markets are intuitive enough. Oil and gas route a certain volume of production through pipelines via forward commitments. Any excess production gets sold via spot markets to those who need today’s5 production.

Your datacenter, even with all of its forward commitments, has excess GPU hours at any given point in time. It turns out that nearly every datacenter has excess GPU hours, and it also turns out there’s no end in sight to the datacenter buildout. And so a spot market forms. Any volume not tied up by a forward commitment currently in use, your datacenter is now willing to sell at whatever the going rate is for compute.

SFCompute and Prime Intellect are notable spot markets that have formed. It’s now viable for a startup, university, or company to get access to GPU hours with no forward commitment needed.

The minute you have spot markets, you now have some reasonable price discovery.6 A data center may discover that demand for spot is such that they should sell fewer forward commitments in the future. If the price for spot is going down, it makes sense to allocate more GPU hours into forward commitments to reduce price risk. None of this is novel.
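A rough sketch of that allocation tradeoff, under heavy simplification: I assume a single fixed forward price, a handful of possible spot prices, and full utilization of every GPU hour. All of the numbers are made up. The point is only that a larger forward share narrows the range of revenue outcomes.

```python
def revenue_range(forward_share: float, forward_price: float, spot_scenarios: list[float]):
    """Min and max revenue per GPU-hour across the spot scenarios.

    forward_share is the fraction of GPU hours sold under forward commitments;
    the remainder is sold at whatever the spot price turns out to be.
    """
    outcomes = [
        forward_share * forward_price + (1 - forward_share) * spot
        for spot in spot_scenarios
    ]
    return min(outcomes), max(outcomes)


# Hypothetical prices in dollars per GPU-hour.
FORWARD_PRICE = 2.00
SPOT_SCENARIOS = [1.00, 2.00, 3.00]

for share in (0.25, 0.50, 0.75):
    low, high = revenue_range(share, FORWARD_PRICE, SPOT_SCENARIOS)
    print(f"forward share {share:.0%}: ${low:.2f} to ${high:.2f} per GPU-hour")
```

A datacenter that expects spot to fall would push the forward share up and accept the narrower band; one that expects spot to rise would do the opposite and hold more volume back.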

In fact, this basic intuition forms the entire case for Western commodity futures markets. Compute futures become possible. Even prior to regulated OTC futures, you can imagine various scenarios like the following and apply them to compute markets:

Let’s take oil and gas where markets are more liquid and easily understood.

I’m an upstream oil and gas company. I drill for oil and I sell it. Spot market prices are going up. I want to sell more oil on the spot market for $50 and I think it’s going to stay there for a while, but unfortunately, I have a lot of forward commitments at a lower price that I’m obligated to fulfill, say at $40.

What I would like to do in theory is get someone else to take over this contract for me (it’s a commodity after all, the customer doesn’t care who’s drilling the oil), and allocate more of my volume to spot market sales to maximize profits. I’m looking for someone that wants to take over this contract because they are confident that spot prices are going down. What I view as a $10 loss, they instead view as a $10 gain as they think the spot price is going down to $30 by the time this contract must be fulfilled.7

And so they purchase a swap: they will now take on the obligation to sell at $40 in 3 months and take on the price risk that the spot market may actually be $50 still. They hedge their risk, maybe for a certain portion of barrels, and suddenly we have a ton of different parties all financially gaining or losing off of the underlying forward commitment vs. spot price dynamic. And now, we have a full financial sector in oil and gas and throughout commodities whose sole task is to perform price discovery, reduce duration risk, create liquidity, etc.
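The arithmetic of that handoff can be sketched in a few lines. This is a simplification under stated assumptions: the party taking over the contract buys at spot on delivery day and delivers at the contract price, no fee changes hands for the transfer, and the 1,000-barrel volume is made up.

```python
def assignee_pnl(contract_price: float, spot_at_delivery: float, barrels: int) -> float:
    """P&L for the party that took over the obligation to sell at contract_price.

    Assumes they source the barrels at spot on delivery day.
    """
    return (contract_price - spot_at_delivery) * barrels


def producer_uplift(contract_price: float, spot_at_delivery: float, barrels: int) -> float:
    """Extra revenue for the original producer from selling freed-up volume at spot."""
    return (spot_at_delivery - contract_price) * barrels


CONTRACT_PRICE = 40.0  # dollars per barrel, from the example above
BARRELS = 1_000        # hypothetical volume

for spot in (30.0, 40.0, 50.0):
    print(
        f"spot ${spot:.0f}: "
        f"assignee {assignee_pnl(CONTRACT_PRICE, spot, BARRELS):+,.0f}, "
        f"producer {producer_uplift(CONTRACT_PRICE, spot, BARRELS):+,.0f}"
    )
```

The two sides are mirror images: whatever the assignee gains if spot falls to $30, the producer would have given up by selling at spot, and vice versa if spot stays at $50.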

In compute, this is not the case today at all. After all these datacenters come online? It’s looking far more likely.

There are simply so many factors that move us towards the financialization of compute and having it trade more like a commodity. And more importantly, it could get even weirder:

The single biggest factor is energy. Energy costs, which make up a significant component of GPU runtime costs, fluctuate.8 Daily ERCOT nodal prices regularly swing by more than 100 percent; those swings feed directly into marginal GPU-hour economics. You’re also talking about energy demand inside of these datacenters potentially meaningfully impacting energy costs on the whole. GPU prices will likely need to fluctuate to account for the variable costs of energy. By the way, tons of the sophisticated energy sector firms experienced in commodity markets are heavily involved with these datacenter buildouts.

Compute isn’t just about pre-training anymore. Test-time compute, RL, eventually test-time learning, and other things have moved a significant amount of compute from pre-training runs that are only directly meaningful for huge foundation models into post-training and inference strategies, which are increasingly the dominating paradigm. This impacts the volatility of compute spend among consumers (both AI agent companies and enterprises). That volatility can of course be normalized in contracts through forward commits, but every party, even inside of these forward commits, deals with upstream risk.

Open source models continue to get better - and it doesn’t look like the pace is truly stopping either. Recent releases of Qwen 3 and Kimi K2 show a 6-9 month lag9 between SOTA and open source models. OpenAI just released their OSS model, with performance roughly on par with o4-mini, plus tool-calling support and more. All of these models are sufficient options for engineers to perform interesting RL, finetunes, and more upon. If this trend continues, model commodification also looks likely, leading to models themselves fluctuating more with GPU costs and spot markets.10

Even if you are skeptical of OSS as a commodification factor, we now have Zuck and Elon newly committed to winning, and no single foundation model company with an overwhelming general advantage, though all have a pseudo-specialization advantage in the short term. Due to intense competition, data needs, and the need for revenue to support these businesses, my guess is no model company is positioned to shut off their APIs until AGI occurs. Further, if and when they adjust token pricing strategies, it’s plausible to me that they will allow these to fluctuate, leading to even more financialization of the sector. We will touch on both of these factors more in a second.
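To put rough numbers on the energy factor above, here is a minimal sketch of how a wholesale power price maps to the electricity cost of one GPU-hour. The 1 kW all-in draw per GPU, the 1.3 PUE (facility overhead multiplier), and the three price points are all assumptions for illustration, not measurements.

```python
def energy_cost_per_gpu_hour(
    price_per_mwh: float,
    gpu_kw: float = 1.0,  # assumed all-in draw attributed to one GPU, in kW
    pue: float = 1.3,     # assumed power usage effectiveness of the facility
) -> float:
    """Electricity cost in dollars of running one GPU for one hour."""
    kwh_per_gpu_hour = gpu_kw * pue
    return kwh_per_gpu_hour * price_per_mwh / 1000.0  # 1 MWh = 1,000 kWh


# Hypothetical wholesale prices in dollars per MWh.
scenarios = {"quiet day": 40.0, "doubled": 80.0, "scarcity spike": 1000.0}

for label, price in scenarios.items():
    cost = energy_cost_per_gpu_hour(price)
    print(f"{label:>14}: ${price:>7,.0f}/MWh -> ${cost:.3f} per GPU-hour")
```

The relationship is linear, so a 100 percent swing in the power price doubles the energy component of every GPU-hour. How much that matters depends on how large energy is relative to the rest of the cost stack, which this sketch deliberately leaves out.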

After Compute, the Models?

To touch on the commodification of the models point for a second: AI and language models exhibit very weird competitive dynamics and it’s anyone’s guess how this ends up. I take a couple of things for granted:

Everyone is essentially training on the same data.

Everyone is essentially within the same fault tolerances for errors.11

Most of the qualitative differentiation stems from different companies’ personification of their models or unique toolsets as opposed to fundamental model gaps:

a. OpenAI: therapist and self-actualization

b. Anthropic: agent and coding specialization

c. Grok: anime waifu chronically online fact checker

d. Gemini: work productivity

The minute an evaluation or market capitalization opportunity presents itself from one model, nearly all the models are able to perform that eval or market opportunity within 6 months. Cf. IMO Gold Medals.

Ultimately then, model personas are the real moat in the short term for any model company. But can this resist commodification as every incremental model reaches parity with one another?

This of course partly depends on your AGI timelines. None of these commodification forces matter at all for the company who reaches AGI.12 All bets are off at this point, and throw this article out the window. But if they don’t, what happens?

If the models get commodified, nearly everything we know about software and value add gets crucified.

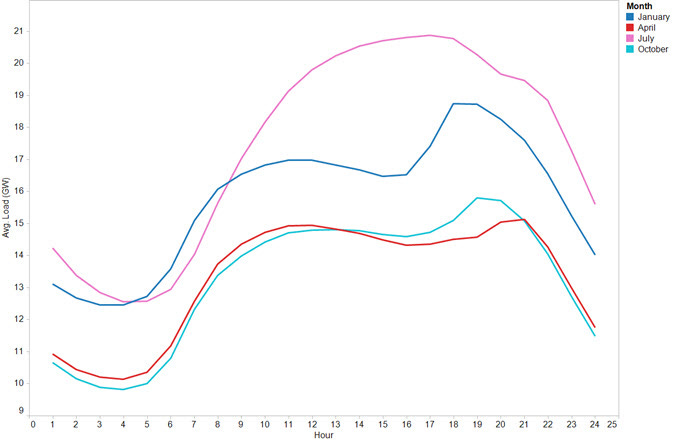

First, model commodification could result in weird inference costs and accompanying arbitrages. I take it as granted that model usage is not stable throughout the day. There are peaks and valleys.

Foundation model companies currently offer a pseudo-“spot” price for tokens. This spot price currently does not fluctuate whatsoever. What if this changes as demand goes up, GPUs remain constrained, model capabilities are relatively commodified, and the volume of potential GPU usage at any given time dwarfs the total inference that Anthropic can support?

Well in this theoretical world, we would already expect GPU costs per hour to fluctuate throughout the day based on energy prices + the current compute load.

And likewise, models certainly fluctuate in usage throughout the day. If I had to guess, peak load is probably not 6:30 ET or 3:30 ET.

It’s at least plausible that models may wish to induce demand during low cost GPU hours, and raise prices to maximize profits during high GPU demand hours.

In this world, models are semi-fungible, and a lower per-token or per-inference price can induce me as a user to shift my demand to some other model, or to run some agent process at a different time of day.

And what do you know, I now have another sort of commodity market that’s viable.
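A toy version of that decision, assuming token prices vary by hour and that the models are interchangeable for the job at hand. The model names, hours, and prices are all hypothetical.

```python
# Hypothetical quotes in dollars per million tokens, keyed by model and hour of day.
PRICES = {
    "model_a": {2: 4.0, 10: 9.0, 15: 12.0, 22: 6.0},
    "model_b": {2: 5.0, 10: 7.0, 15: 8.0, 22: 5.5},
}


def cheapest_slot(prices: dict, allowed_hours: set):
    """Return (price, model, hour) for the lowest quote within allowed_hours."""
    quotes = [
        (price, model, hour)
        for model, by_hour in prices.items()
        for hour, price in by_hour.items()
        if hour in allowed_hours
    ]
    if not quotes:
        raise ValueError("no quotes available in the allowed hours")
    return min(quotes)


# An urgent job must run at 15:00; a deferrable agent run can go at any quoted hour.
urgent = cheapest_slot(PRICES, allowed_hours={15})
flexible = cheapest_slot(PRICES, allowed_hours={2, 10, 15, 22})

print("urgent:  ", urgent)
print("flexible:", flexible)
```

With these made-up quotes, the urgent job switches models to save money, while the flexible job switches both model and time of day. That gap between the two is the demand a provider would be trying to induce with off-peak pricing.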

Ultimately, these commodification forces may simply result in the ultimate compute commodity market. Everyone’s leveraged to the GPU hour price, everyone’s margins are compressed on top of GPUs, the models themselves are compressed by innate competition and open source, the agent companies are compressed by compute-hungry organizations who want as much compute as possible.

This is a weirder world. But it’s not necessarily a bad one. If these factors occur, we are likely talking about the total amount spent on inference exceeding hundreds of billions of dollars annually, with steady model improvement and incredible revenue growth that, even with lower margins, makes lots of people lots of money.

But to draw some of the implications out: it does mean that application layer companies may want to prepare for a weirder future. First, if these factors occur, application layer companies would in essence partly operate as trading desks for their clients. They arbitrage current token pricing, model performance, bulk token commitments, and more, and take a cut on this revenue.

Second, the financial layer around this could end up dwarfing everything else. Noah Smith has a good piece out around some of the current financial dynamics and risk in datacenters:

The only real lesson from commodity markets is that the financial markets around commodities end up dwarfing the actual market. Now what happens if financial markets are essentially indexing the entire chain of labor productivity through inference and compute? These inference financial markets could legitimately end up dwarfing everything.

And if these financial markets emerge to the extent I’m describing, Silicon Valley may be the premier trading desk capital of the world.
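As a toy illustration of the trading-desk idea above: an application layer company takes a client’s quality bar, picks the cheapest model that clears it, and charges a markup. The quotes, quality scores, and the 10 percent cut are all hypothetical, and a real desk would also weigh latency, bulk commitments, and timing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    model: str
    price_per_mtok: float  # dollars per million tokens (hypothetical)
    quality: float         # hypothetical eval score between 0 and 1


def route(quotes: list, min_quality: float, desk_cut: float = 0.10):
    """Pick the cheapest quote meeting min_quality and return (quote, client_price)."""
    eligible = [q for q in quotes if q.quality >= min_quality]
    if not eligible:
        raise ValueError("no model meets the quality bar")
    best = min(eligible, key=lambda q: q.price_per_mtok)
    return best, best.price_per_mtok * (1 + desk_cut)


QUOTES = [
    Quote("frontier", price_per_mtok=10.0, quality=0.95),
    Quote("mid_tier", price_per_mtok=4.0, quality=0.85),
    Quote("open_weights", price_per_mtok=1.0, quality=0.70),
]

chosen, client_price = route(QUOTES, min_quality=0.80)
print(f"routed to {chosen.model} at ${client_price:.2f} per million tokens")
```

The client pays less than the frontier price, the desk keeps the spread, and the desk’s margin depends entirely on how well it tracks prices and model quality over time.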

After the Commodification, the Crash?

Part of the reason I wanted to write some thoughts down is that when you look at nearly every important sector subject to financial markets, there’s eventually a blowup. In the case of AGI, the blowup is obvious - it’s the entire economy. This wouldn’t be a bad blowup, but it’s hard to have any notion of how the economy itself would reorganize. The blowup in the case where we don’t get to AGI and experience rapid commodification and financialization of compute and AI layers is potentially more interesting and nuanced.

In my view, it’s worth taking a look at the fracking industry of the 2010s. Silicon Valley largely did not participate; instead, it was led by roughnecks drilling in red dirt, with a fundamental technology innovation in the form of horizontal drilling that led to financial mania. Fracking led to the end of peak oil hysteria, the resurgence of American energy production, arguably… and somewhere between $100B and $300B in losses for the industry participants as a whole. The people who made a ton of money? Commodities traders. During this period, US commodity markets grew from $7.6T to around $14T. And as of 2021, US commodity markets are nearly $40T in annual trading volume.

After the crash, oil and gas companies got increasingly sophisticated around industry consolidation, financial hedging, and more. In commodity markets, that financial sophistication is potentially as critical as being good at drilling.

My contention is simple: company strategy in the AI world will increasingly be levered to financial sophistication around compute.

Just don’t blow up when the futures markets hit.

1. https://assets-cms.globalxetfs.com/CD25-AI-Infrastructure-Laying-the-Groundwork-Final.pdf

2. It turns out that in nearly every commodity business, this is immensely destructive to equity. See fracking and railroads.

3. By the way, I’m in no way convinced that model companies strangle the application layer for B2B. The race is to AGI for these guys. Application layer looks incidental towards that goal and money that could be spent on R&D should not be spent on S&M.

4. Some of this is a function of monopoly wars where AI application layers effectively subsidize demand capture through lower token costs and token insensitivity.

5. This is of course simplified.

6. What follows is simplified.

7. You could, of course, envision this party not even owning drilling rigs. They just buy spot and fulfill demand. I.e., we have just invented trading desks.

8. Right now, datacenters have amazing energy costs stemming from stranded natural gas in the Permian and other areas. Unclear to me how this shakes out long term if compute demand continues to go up.

9. I’m even okay with arguments saying they’re 12 months behind.

10. This will always be an imperfect commodification, but to the extent that this leads companies to run models themselves (or via their application partners) on their own GPU clusters, it tends towards commodification.

11. The error rates work like this: Every model needs its outputs reviewed. Until the error rate is 0% on a given model at all times, this is true. Since this assumption is now baked in, a marginal difference in error rates for users is far less critical than it was 2 years ago when humans weren’t habituated to verifying and validating AI work.

12. In the AGI case, things get extremely unpredictable as the value add from AGI and then ASI is so severe across domains that none of this even matters. This results in a weird, theoretical graph where prior to AGI, margins are commodified, but then whoever reaches AGI first gets to infinite margins and everyone else’s margins theoretically go to zero.

13. The commodification here will really show up when you experience commodification of the outputs for any discrete process. If model GPT-n is SOTA, but model GPT-n-1 is all that’s needed for your particular process, in theory you would have many models and GPUs competing to fulfill your process at commodity rates. In 6 months’ time, your processes that are only fulfilled by GPT-n would now be able to be performed at parity by the SOTA OSS model, and commodification newly ensues. Sawtooth compute forecasts ensue.
