ELDs, Samsara, and Veeva

tuesday riffs #3

Samsara and ELDs

Over in logistics, Samsara is still cruising along at roughly $1.349B in ARR. It seems like a good time to revisit the trucking industry around Samsara’s core product: ELDs.

Samsara, of course, was one of the early companies to seize the opportunity the 2015 ELD rule created. The federal government looked at fatalities in collisions involving large trucks, most likely also looked at how many paper logs had fudged numbers, and decided that commercial truckers must adopt Electronic Logging Devices.

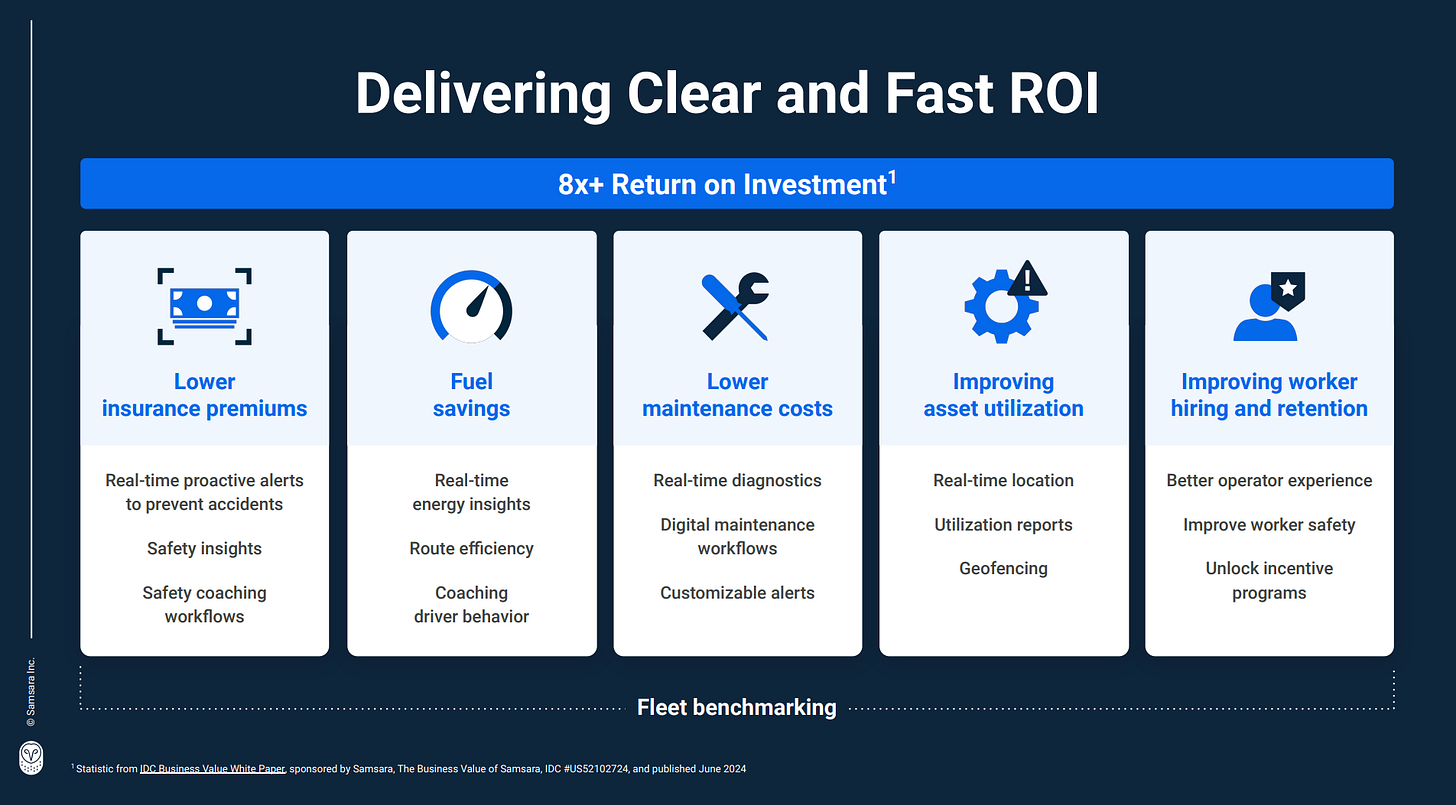

A number of companies were subsequently founded that saw the mandate not just as a wedge into the ELD market but as an opening to run operations better. After all, the more data a truck transmits electronically, the more you can theoretically do with it.

You can see this pretty clearly in Samsara’s mission statement. Safety, efficiency, and sustainability - all theoretically made possible by the ELD mandate.

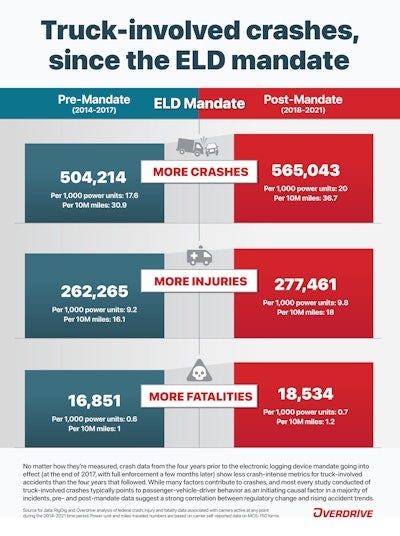

What’s really interesting is that for the first five or so years after ELD adoption (the mandate was really enforced starting in 2018), the safety data - the very reason for the mandate - was quite mixed.

In fact, large-truck fatalities went up in 2021-22, even with fewer vehicles on the road during the pandemic.

This led a group of truckers to rail loudly against ELDs in general. There are other gripes as well: ELD rules are fairly inflexible. Drive over a certain amount today and you won’t be driving tomorrow. And the rules come with pretty severe penalties that you can no longer dodge by fudging paper logs.

It’s interesting to speculate about the reasons behind this data. You could posit that codifying compliance so rigidly pushes truckers to drive more dangerously (read: speed) in their final hours before being cut off from driving. Or maybe any new technology with accompanying federal rules just takes time to settle in. This was really a rehabituation of how truckers manage their driving.

And you’re beginning to see this in the data. Fatalities are now below 2018 levels.

I find this data remarkable because it shows a miniature sociotechnical revolution. Truckers and companies adopt a new tech paradigm, initial results are mixed, and then companies and individuals slowly adapt to the new paradigm, gaining efficiency, (theoretically) safety, and better cost structures. And there’s probably no going back. The ROI is simply too strong, even if the mandate were repealed.

Veeva

I’ve gotta be honest, I’m a little surprised Veeva isn’t moving faster on generative AI. They’ve started rolling out two tools: a CRM bot and a medical, legal, and regulatory (MLR) review bot. These bots? Due out in late 2025. That seems shockingly late! Perhaps, though, this is emblematic of the dilemma vertical SaaS companies face with AI right now. Let’s use Veeva to suss out the core dilemma for systems of record and AI products.

Vertical-Specific Risk - Veeva’s entire value proposition centers on trusted communication between life sciences companies and their customers. This is not something you mess around with. There’s regulatory scrutiny, and potentially an increasingly hostile government agency, over how life sciences companies communicate about their products and services. Hallucinations here carry substantial risk.

Core Risk - For vertical SaaS companies, do you build AI close to the core, or do you build ancillary products that interact with the core but don’t necessarily streamline core workflows? Veeva has clearly chosen the latter. They’re focused on capturing actions that their system theoretically supported but that aren’t necessarily directly accessible in the platform today. That is very different from using AI as a core workflow for manipulating the platform itself.

General Stochastic Design Risk - All of this leads to what I think is the true dilemma vertical SaaS companies are working through: for the most part, they eschew stochasticity in their workflow design. In fact, their core value proposition has basically been “we bring order to chaos.” AI-native companies show a noticeable shift in tone and mentality. It’s quite literally the get-stuff-done era. Don’t like your workflow? Great, turn it over to our AI. Want better margins? Awesome, we’ve got your back.[1] “Claim bigger, settle faster.” “Turn paperwork into your patient advantage.”

I’ll be the first to point out this isn’t particularly well thought out yet (we are just riffing on a Tuesday, after all). But I think it can be summed up like this: right now, the most meaningful vertical AI companies do not view the database, the system of record, as the point. Accomplishing the work is.[2] Many incumbent vertical SaaS companies have the database and product-market fit, and quite simply don’t fully believe you can build a meaningful company without owning the system of record. So any perceived risk that AI-driven workflows pose to the system of record is met with hesitancy. The retort is still “we have the data,” so they can slow-play the AI disruption, avoid faulty design choices, and make sure they take on no key risks to their business. But what if “having the data” is no longer the sole asset? And what if protecting the system of record from stochasticity, workflow disruption, and more ends up being the cause of death for a generation of vertical SaaS companies? I’m not convinced either way. But I think there are more and more reasons to believe this is a possible (albeit distant) future.

Every single technology goes through this cycle. There’s been a belief that legacy SaaS companies escape the Innovator’s Dilemma. Frankly, I think the contrast between the mentality in and around AI-native vertical companies and the mentality inside traditional vertical SaaS companies points to a qualitative reason disruption may be coming.

All of these companies are putting guardrails around the AI, of course. They’re very smart people! They’re just far more focused on the industry’s work itself than on building the best database for the industry.

Appreciate the thoughts at the end about stochasticity. I have been thinking about it in terms of rigidity vs. flexibility. Last-generation software is rigid, with flexibility provided by users or implementation. AI promises flexibility in the software itself, as you note.

Regarding your last thought, Tidemark has good thinking here. Systems of record have multiple control points, of which data gravity is only one. Workflow gravity is another, and it seems like one AI can own well: https://www.tidemarkcap.com/vskp-chapter/control-points-patterns-2024