Vertical Clients

Vertical AI companies and interface + agent design

A couple of preliminary notes: 1. We are back. 2. Going forward, you’re going to see two different types of pieces from me: Industries, which primarily looks at the business models that new technology makes possible within industries, and Verticalized, which will continue to focus on the technology companies serving verticals.

One more note:

If this piece around AI design interests you, you should subscribe to what we are doing over at psci-labs.substack.com. More to come soon.

The critical questions in vertical technology have not changed over the past year. Your goal as a vertical operator is to capture as much of your market as possible by providing technology solutions to industries where you can effectively invest more in R&D than any individual incumbent.

What has changed, I think, is the product strategy underlying successful R&D initiatives in these industries. And of course, this has everything to do with AI.

Surface Area and Token Utilization

TL;DR:

Vertical operators must capture market share by leveraging AI-driven R&D.

“Surface area” in vertical markets now equates to “tokens” in AI—token utilization is the new key metric.

Token utilization is the primary measure of success for vertical AI companies.

Tokens quantify business tasks, reflect complexity, and help size markets—every business has a token ceiling that correlates with value.

Vertical markets demand one thing from their vendors: serve as much surface area of our business as possible.[1]

When vertical companies hear “surface area,” they should immediately translate it into the language of AI: tokens. The only question I think really matters for predicting the success of a vertical AI company is this:

What is the total addressable token utilization in your specific industry?

I favor token utilization over all other estimates for a few reasons:

We can price each business task or workflow in token count.

Tokens are universal across models: the more tokens used, the more complex the task.[2]

We can skip all the implementation details and focus solely on the total penetration possibility. This is helpful for market sizing, keeps us honest about the state of AI, and also lets us sketch out where the future is headed.
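To make “price each business task in token count” concrete, here is a minimal sketch, assuming a workflow can be broken into steps with estimated input, output, and reasoning token counts. The `Step` class, the step names, the token counts, and the blended per-million-token price are all hypothetical placeholders, not measured figures or any provider’s actual pricing.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One step of a business workflow with estimated token counts (hypothetical)."""
    name: str
    input_tokens: int
    output_tokens: int
    reasoning_tokens: int = 0  # hidden "thinking" tokens from reasoning models

    @property
    def total(self) -> int:
        return self.input_tokens + self.output_tokens + self.reasoning_tokens


def workflow_tokens(steps: list[Step]) -> int:
    """Total token count for one run of the workflow."""
    return sum(step.total for step in steps)


def workflow_cost(steps: list[Step], usd_per_million_tokens: float) -> float:
    """Cost of one run at a single blended price per million tokens (an assumption)."""
    return workflow_tokens(steps) * usd_per_million_tokens / 1_000_000


# Hypothetical example: reconciling one vendor invoice.
invoice_reconciliation = [
    Step("extract line items", input_tokens=4_000, output_tokens=800),
    Step("match to purchase order", input_tokens=6_000, output_tokens=500, reasoning_tokens=3_000),
    Step("draft exception note", input_tokens=2_000, output_tokens=400),
]

tokens = workflow_tokens(invoice_reconciliation)
cost = workflow_cost(invoice_reconciliation, usd_per_million_tokens=5.0)
print(tokens, round(cost, 4))  # 16700 0.0835
```

Multiplying the per-run total by how often the task runs in a year gives a first cut at that task’s share of an industry’s token utilization.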

Token consumption will be the single defining feature of vertical AI companies going forward. The more tokens your vertical consumes, the more value you create.[3]

Business value is inherently linked to tokens. Every business at this point has a theoretical maximum number of tokens it can consume annually. That ceiling is at least in the billions and, for the mid-market, most likely in the trillions.[4]
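A back-of-the-envelope version of that ceiling, using the per-employee estimate given in the notes (roughly 500,000 to 1 million tokens per day, possibly 10x higher). The 1,000-person headcount and the 250 working days per year are hypothetical assumptions chosen for illustration, not figures from any particular company.

```python
def annual_token_ceiling(employees: int, tokens_per_employee_per_day: int,
                         working_days: int = 250, multiplier: float = 1.0) -> float:
    """Rough annual token ceiling: headcount x daily tokens x working days.

    `multiplier` models the possibility that daily usage is underestimated
    (e.g. 10x once reasoning tokens are counted). All inputs are assumptions.
    """
    return employees * tokens_per_employee_per_day * working_days * multiplier


# Hypothetical mid-market company with 1,000 employees.
low = annual_token_ceiling(1_000, 500_000)
high = annual_token_ceiling(1_000, 1_000_000)
high_10x = annual_token_ceiling(1_000, 1_000_000, multiplier=10)

for label, value in [("low", low), ("high", high), ("high x10", high_10x)]:
    print(f"{label}: {value / 1e9:,.0f} billion tokens/year")
# low: 125 billion tokens/year
# high: 250 billion tokens/year
# high x10: 2,500 billion tokens/year
```

Even the low end lands in the hundreds of billions, and the 10x case crosses into the trillions, which is the range estimated above for the mid-market.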

Agent Productization is About to Get Interesting

TL;DR:

As AI advances, the number of agentic processes will explode.

The competitive edge is not in codifying every agent, but in unleashing them with the right tools, configurations, and client interfaces.

Future vertical AI success hinges on enhancing the client-side experience.

The “interface problem” means human productivity will be limited by how well we manage and interact with agents, not by the agents’ raw capabilities.

There’s one other advantage to the token utilization approach vs. thinking first in terms of industry workflows or something else: model progress is moving so fast that we may soon live in a world where AI companies cannot meaningfully “productize” all possible token consumption for their industry.[5] Agents inside verticals are semi-unpredictable. Your customers will be more creative than you are at identifying where they can create new value with agents.

Your leg up is not in codifying all possible agents, but in unleashing them. It’s in equipping businesses with the right tools, model configurations, and, more importantly, the right client to manage them.

In my view, the vendors who will win in the vertical AI age will have a substantial share of their R&D concentrated in the client-side experience vs. the server side. And yes, this is an imperfect analogy. But I wager that as AI continues to evolve, this will be the defining way vertical AI companies create value.

This is entirely because of one underlying principle: as token utilization through agents, agentic processes, LLM workflows, and more explodes within organizations, managing, analyzing, and interacting with these agents and tokens will become a major productivity blocker. Let’s call this the interface problem. Total productivity will soon be capped not by the capabilities of agents but by the humans responsible for navigating them.

Second, within many verticals (if not all), vertical transformation will depend upon two characteristics:

Minimizing the number of humans in the value chain.

Maximizing the productivity of the humans who necessarily remain in the value chain.

This points, I think, to two distinct goals that vertical AI companies are running into these days:

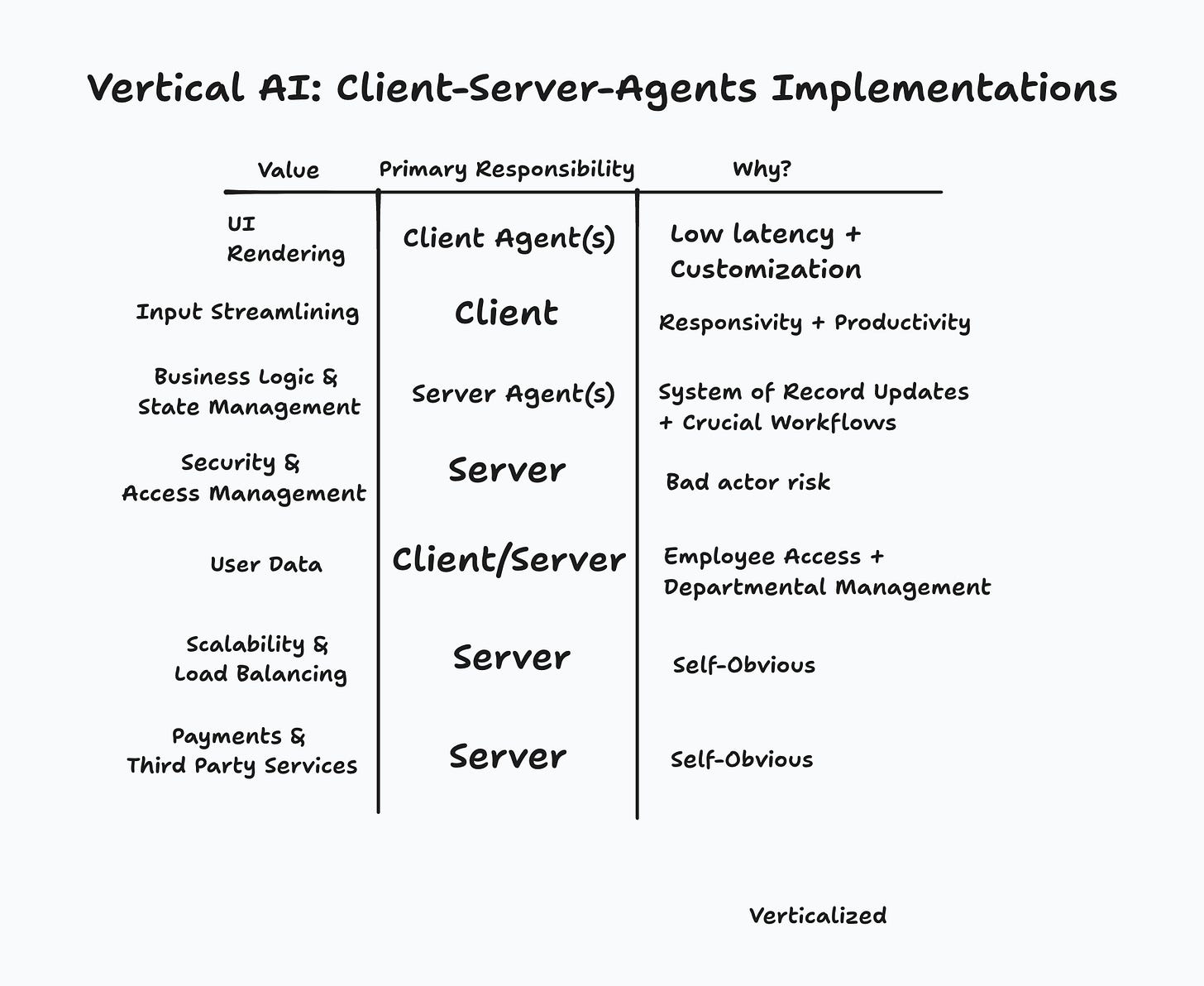

Agents on the client side

Agents on the server side (business logic)[6]

In short, I expect client design and optimization to soon become a huge part of thoughtful vertical AI design.

Client-Side vs. Server-Side Agents

TL;DR:

Traditional vertical SaaS equates client UI with server logic—but that’s evolving.

The gaming industry’s mastery of client-side optimization offers valuable lessons for creating dynamic, responsive vertical AI interfaces.

Server-side agents aim to reduce human involvement, while client-side agents boost human productivity by streamlining interactions.

Client-side agents are key—they directly impact the user experience and productivity.

Let’s get this out of the way: of course, every web application is a client-server app.

What I really want to capture here is the growing gap between what’s possible in vertical clients and what’s managed on a server.

This isn’t a useless distinction. Currently in vertical SaaS (and really most SaaS), we don’t even think in terms of client vs. server. Vertical SaaS has been marked by nearly 1:1 fidelity between server-side processes/workflows and what’s captured in the client experience.

We can see this fairly quickly when we compare current vertical SaaS with one of the most complex client-server implementations: gaming.

Gaming has spent 30+ years mastering the division between client-side and server-side processes. We can distill its lessons into discrete goals:

Use local compute where possible.[7]

Reduce latency and perceived lag wherever possible.

Enable a scalable and secure experience.

Handle and communicate relevant updates about the server to the player.

These goals are set because of the complexity of online gaming:

Servers can be managing millions of players at once.

Latency or input lag is the worst thing a gamer can experience.

If we analogize to the current enterprise or business, servers are informal. Systems of record capture important data, but work happens outside the server and then gets logged back. Latency is driven by employees: time is spent waiting for an update from accounting, feedback on a deck from a boss, or correspondence with a customer.

All of this changes in an agentic world.

Agents don’t keep 9-to-5 hours; they work continuously. The number of agents will most likely outpace the number of humans.[8] Humans will quickly become incapable of keeping track of all server-side agents. Simply put, they will need better tools to manage and interface with agents and processes.

In my view, this is the single most exciting aspect of the AI revolution. AI is really about refactoring organizations, and that refactoring will rely on the best clients. The opportunity in vertical AI revolves around helping specific industries build the clients for their agents.

Ultimately, if Satya Nadella is correct that more and more of the business logic inside current SaaS applications will shift toward agents, clients must be rethought to take advantage of that shift. These problems are non-trivial and, in my view, do not reduce to pure code generation. I believe moats can be found in the best clients.

Design Considerations

TL;DR:

Critical questions for client design: How do humans interact with systems, and how do they manage complex agentic processes?

Dynamic, context-aware interfaces (beyond static dashboards) are essential to reduce context-switching costs and tackle the interface problem.

I’ll be the first to admit: server-side vs. client-side agents is an imperfect distinction. As long as models are primarily accessible via an API, they’re all server-side.

What I am really trying to get at is a core distinction in value creation:

As we said at the outset, vertical transformation depends upon two characteristics:

Minimizing the number of humans in the value chain. We refer to the agents that do this as server-side agents.

Maximizing the productivity of humans in the value chain. The agents that do this are client-side agents.

Client-side agents are the most fundamental ones to address because they are the ones that will most directly impact human operators and will represent the key bottlenecks on productivity.

These agents are explicitly built to enhance an individual’s productivity and to make their experience of interfacing with backend automation as streamlined as possible.

In short, most of these functions will ultimately revolve around personal productivity suites, panels into agent activity, and analytics around the work they are responsible for.

We can turn these into a series of questions that vertical clients must answer.

How will humans interact with clients and thus the server?

One answer for client-side agent interaction: it can happen anywhere. While Devin has a web app, its primary interactions happen inside Slack. This simple design choice points, I think, to an important future: client value extends past the UI. Clients are interaction modules, callable from anywhere. Productivity is not going to be bound by your web app, and therefore thoughtfully crafting client-side agent interactions is going to be a massive part of AI value creation. That means defining the tone, capabilities, and callbacks for this agent. How do you design the best co-worker for a busy human operator who doesn’t have time to keep track of all the backend agents running?
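One way to read “clients are interaction modules, callable from anywhere” in code: a single client-side agent holds the capabilities, and thin channel adapters (a chat integration, a web app) only translate messages in and out. This is a minimal sketch; the `ClientAgent` class, the `status` capability and its canned reply, and both adapter message formats are hypothetical, and no real Slack or web framework API is called.

```python
from typing import Callable


class ClientAgent:
    """Hypothetical client-side agent: one core, reachable from any surface."""

    def __init__(self) -> None:
        self.capabilities: dict[str, Callable[[str], str]] = {}

    def register(self, name: str, fn: Callable[[str], str]) -> None:
        self.capabilities[name] = fn

    def handle(self, command: str, argument: str) -> str:
        fn = self.capabilities.get(command)
        if fn is None:
            options = ", ".join(sorted(self.capabilities))
            return f"Unknown command '{command}'. Try: {options}"
        return fn(argument)


def chat_adapter(agent: ClientAgent, text: str) -> str:
    """Parse a chat-style message such as '/status claims' and reply in plain text."""
    command, _, argument = text.lstrip("/").partition(" ")
    return agent.handle(command, argument)


def web_adapter(agent: ClientAgent, payload: dict) -> dict:
    """Accept a JSON-like request from a web app and wrap the same reply."""
    return {"reply": agent.handle(payload["command"], payload.get("argument", ""))}


agent = ClientAgent()
# Stub capability; a real one would query server-side agent state.
agent.register("status", lambda area: f"3 agents running in {area}, 1 needs review")

print(chat_adapter(agent, "/status claims"))
print(web_adapter(agent, {"command": "status", "argument": "claims"}))
```

The design choice being illustrated: capabilities live in one place, so reaching the operator on a new surface means writing another adapter, not another agent.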

How will humans manage or analyze agentic processes that they are responsible for?

If token utilization explodes, the central question becomes how employees in the value chain understand what the heck is going on inside of all the areas they were formerly responsible for. This is perhaps the area with the most alpha for development. It will require thoughtful UI to implement.
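A minimal sketch of one piece of that UI problem: collapsing a stream of server-side agent events into a short digest that surfaces only what needs a human. The `AgentEvent` fields, the agent names, and the rule that only `failed` and `needs_review` events are escalated are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AgentEvent:
    agent: str    # which server-side agent emitted the event (hypothetical schema)
    status: str   # e.g. "completed", "failed", "needs_review"
    summary: str  # one-line description of what happened


ESCALATE = {"failed", "needs_review"}  # statuses assumed to require a human


def build_digest(events: list[AgentEvent]) -> dict:
    """Count events by status and list only the ones a human should look at."""
    counts = Counter(e.status for e in events)
    attention = [f"[{e.status}] {e.agent}: {e.summary}"
                 for e in events if e.status in ESCALATE]
    return {"total": len(events), "by_status": dict(counts), "needs_attention": attention}


events = [
    AgentEvent("intake-agent", "completed", "Processed a batch of new claims"),
    AgentEvent("billing-agent", "failed", "Payer portal rejected 3 submissions"),
    AgentEvent("intake-agent", "completed", "Flagged duplicates"),
    AgentEvent("coding-agent", "needs_review", "Low-confidence coding on one claim"),
]

digest = build_digest(events)
print(digest["total"], digest["by_status"])
for line in digest["needs_attention"]:
    print(line)
```

Four events go in and two lines reach the operator. As agent counts grow, keeping that ratio favorable is much of the client’s job.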

Balancing context-switching costs for humans will be more important for vertical AI than simply managing context windows for agents. The central debates will revolve around how dynamic the interfaces will be.

This is because the marginal cost of code generation is now near zero, but the cost of bad code in businesses remains far too high. Dynamic vertical clients may offer a way forward. Until now, we have had hardcoded UIs full of dashboard views, management components, and more. The value of these Platonic components is still large: you need dashboards, you need management views, and more. But this too points to where the best vertical clients may go:

Have a Platonic Dashboard, and leave the specific views, code generation, database querying, and more up to the client-side agent and the user. Your goal here is not to instantiate an agent for the entire business, but to instantiate one that adapts to the specific needs of each user inside your platform.
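A minimal sketch of that split, under stated assumptions: the fixed (“Platonic”) panels are always rendered, while the client-side agent proposes extra views as small declarative specs that the client validates against an allow-list before rendering. Validating a spec instead of executing arbitrary generated code is one assumed way to contain the cost of bad code; the panel names, metrics, groupings, and aggregations are hypothetical.

```python
PLATONIC_PANELS = ["revenue_summary", "open_tasks", "agent_activity"]  # always shown

ALLOWED_METRICS = {"revenue", "claims", "denials"}
ALLOWED_GROUPINGS = {"month", "payer", "location"}
ALLOWED_AGGS = {"sum", "count", "avg"}


def validate_view(spec: dict) -> list[str]:
    """Return the problems with an agent-proposed view spec (empty list if valid)."""
    problems = []
    if spec.get("metric") not in ALLOWED_METRICS:
        problems.append(f"unknown metric: {spec.get('metric')!r}")
    if spec.get("group_by") not in ALLOWED_GROUPINGS:
        problems.append(f"unknown grouping: {spec.get('group_by')!r}")
    if spec.get("agg") not in ALLOWED_AGGS:
        problems.append(f"unknown aggregation: {spec.get('agg')!r}")
    return problems


def layout_for_user(proposed_views: list[dict]) -> list[str]:
    """Fixed panels first, then only the agent-proposed views that pass validation."""
    layout = list(PLATONIC_PANELS)
    for spec in proposed_views:
        if not validate_view(spec):
            layout.append(f"{spec['agg']}({spec['metric']}) by {spec['group_by']}")
    return layout


proposed = [
    {"metric": "denials", "group_by": "payer", "agg": "count"},
    {"metric": "salaries", "group_by": "employee", "agg": "sum"},  # rejected
]
print(layout_for_user(proposed))
# ['revenue_summary', 'open_tasks', 'agent_activity', 'count(denials) by payer']
```

The user gets views shaped to their needs, while the platform still decides which data and operations those views may touch.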

I originally wrote this before DeepSeek and Deep Research, and times might be changing even faster. Perhaps you don’t even need to build a full dashboard view. The path forward may very well be report generation on proprietary data, with contextual dashboards rendered where appropriate.

The future is full of possibilities, and businesses are all trying to get this stuff on board. As agents become better equipped, self-reproducing, and integrated with more and more tools, complexity explodes. Distilling that complexity on the client side for industries is perhaps the most important challenge in vertical AI today.

As always, love chatting through this stuff. Reach out if you want to continue the conversation.

[1] This is particularly true in the SMB.

[2] Reasoning models like o1/o3 expand the token count available to industries, and therefore to vertical agents, exponentially.

[3] Right now, it’s simply not worth thinking deeply about monetization strategies except insofar as they bear on this single defining question: token usage maximization. All value creation and subsequent value capture will arise from being the place where token consumption occurs.

[4] The average employee today is easily capable of consuming roughly 500,000 to 1 million tokens a day, and I could easily be underestimating this by a factor of 10. If that seems insane, my guess is you are underestimating the reasoning tokens in test-time compute models and the sheer growth of knowledge work processes as models become more and more capable.

[5] The goal for vertical SaaS/AI at this point will not be to productize all business logic for a specific company. Businesses are stochastic outside of core processes and will resist standardization across the entire surface area of their business. This whole line of argument can wait for another day, but in short, token utilization is going to correlate with business logic you will not have perfect control over.

[6] Of course, these are often blended, but you get my drift.

[7] I’m not expecting this to characterize systems right now, but depending on how test-time compute scaling and algorithmic efficiency develop, there will certainly be an argument for local compute in certain client processes.

[8] Specialization of labor still holds true in an AI-driven world. Whenever possible, companies will favor the highest eval score at the lowest price point. This generally means agents themselves will be continually refactored into more specialized agents. My baseless speculation: this would still hold true in an ASI world.