Vertical RL Environments

game theory in an RL era

No posts over the weekend because we welcomed a new baby into the family. Back to our regularly scheduled cadence now.

The Conditions Have Changed Materially

I’ve been mulling over the game theory between systems of record and AI. About a year back, there was heightened fear that systems of record were going to be displaced by AI systems entirely. And while I remain less than convinced that systems of record are best positioned for the wholesale transformation of work stemming from some superintelligence, we don’t seem anywhere close to that reality right now.

In fact, the new paradigm in AI seems to implicitly acknowledge that fast takeoff scenarios are out the window. Instead, current approaches use RL techniques to upregulate desirable behavior and reasoning in models. These techniques are applied via environments that equip a model with a playground for some activity: an M&A negotiation playground, a Wordle simulator, or literally anything else. The environments come with verifiable rewards: grading mechanisms that the labs use to pick the reasoning traces that result in the most favorable negotiation, the smallest number of Wordle guesses, or again literally anything else.
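To make the idea of an environment with a verifiable reward concrete, here is a minimal sketch of the Wordle case. Everything in it is an assumption for illustration: the toy word list, the reward formula, and the random policy standing in for a model. Picking the best of several rollouts is also a simplification; real post-training would use the rewards to update model weights rather than just select a trace.

```python
import random
from collections import Counter

# Toy vocabulary (an assumption); a real environment would use a full word list.
WORDS = ["crane", "slate", "pride", "mount", "ghost"]

def feedback(guess: str, target: str) -> str:
    """Wordle-style feedback: G = right spot, Y = wrong spot, . = absent."""
    result = ["."] * len(target)
    remaining = Counter()
    # First pass: mark exact matches and count unmatched target letters.
    for i, (g, t) in enumerate(zip(guess, target)):
        if g == t:
            result[i] = "G"
        else:
            remaining[t] += 1
    # Second pass: mark misplaced letters, respecting letter counts.
    for i, g in enumerate(guess):
        if result[i] == "." and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)

def rollout(target, policy, max_guesses=6):
    """Play one episode and return (trace, reward)."""
    trace = []
    for turn in range(1, max_guesses + 1):
        guess = policy(trace)
        trace.append((guess, feedback(guess, target)))
        if guess == target:
            # Verifiable reward: solved, and fewer guesses scores higher.
            return trace, 1.0 - (turn - 1) / max_guesses
    return trace, 0.0  # unsolved episodes earn nothing

def random_policy(rng):
    """Stand-in for a model: guesses untried words at random."""
    def policy(trace):
        tried = {g for g, _ in trace}
        return rng.choice([w for w in WORDS if w not in tried])
    return policy

def best_of_n(target, n=8, seed=0):
    """Sample n rollouts and keep the highest-reward trace."""
    rng = random.Random(seed)
    rollouts = [rollout(target, random_policy(rng)) for _ in range(n)]
    return max(rollouts, key=lambda r: r[1])

if __name__ == "__main__":
    trace, reward = best_of_n("crane")
    print(trace, reward)
```

The point of the sketch is that the grader needs no human in the loop: the reward is computed mechanically from the episode, which is what makes this kind of environment cheap to run at scale.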

Suffice it to say, we don’t know a) whether this generalizes as a technique, or b) how many environments are necessary to assemble the AI god. But we do know that it works. If there is an eval, the labs are going to ensure their model crushes the eval.

But the reason I bring this up is that it illuminates the new conditions that underlie vertical strategy. Fast takeoffs seem improbable, model progress is contingent on RL post-training, and these techniques are contingent on having properly constructed environments where models can make meaningful progress.

This is dramatically different from a timeline where training across a corpus of internet data is the only thing that matters, generalized models become more and more capable with pre-training compute, and AI progress blows past nearly every single software company.

Now? It looks more and more like vertical expertise is going to become a defining feature of the path to better AI models.

Game Theory

Here’s the new game theory gist:

Let’s take it as a given that vertical companies win in perpetuity if a couple of conditions hold true:[1]

Better AI models do not ultimately lead to system of record obsolescence.[2]

AI interaction for a business is mediated through its vertical platform, and as a result vertical companies are fast adopters of new AI capabilities.[3]

This second portion is where things get interesting in my view. In a world where model progress is dictated by eval and environment construction, the companies that see the most rapid progress in their domains are the ones that operate not merely as wrappers for AI models but as advocates for model improvement on the core deliverables that their industry cares about.

I use the word “deliverables”… well, deliberately. Every model release grows the number of discrete productized “deliverables” that companies can theoretically build and support for their customers. AI systems are concerned with action. Every useful action for an organization must end with something being handed over. The caliber of this deliverable is ultimately what the entire AI experience will be judged by.[4]

Round 1 of these deliverables has mostly been structured around customer communications. Voice and text models made this feasible and it became a relatively easy addition to justify.

Round 2 (you can make the case this was also a part of Round 1) of these deliverables has been something like “RAG systems” for internal company search.

Things get tricky after Round 2:

Now we want agents that can accomplish long-horizon tasks that require tons of context, many decisions, and multimodal use of tools, data artifacts, and more. The quality of these deliverables is now highly industry-dependent.

From here on out,[5] an agent’s ability to produce deliverables is contingent on a) high-quality data, b) expert feedback on evaluation and deliverable quality, and c) simulated environments that mirror the industry deliverable closely enough to result in high agent quality.
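One way to picture how expert feedback on deliverable quality could become a machine-checkable reward is a weighted rubric. The sketch below is purely hypothetical: the `Check` structure, the construction cost estimate, and every rubric item and weight are assumptions made up for illustration, not a description of how any lab or vertical company actually grades deliverables.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Check:
    name: str                        # what an industry expert would look for
    weight: int                      # relative importance, set by the expert
    passed: Callable[[dict], bool]   # mechanical test on the deliverable

def grade(deliverable: dict, rubric: list) -> float:
    """Return the weighted fraction of rubric checks the deliverable passes."""
    total = sum(c.weight for c in rubric)
    earned = sum(c.weight for c in rubric if c.passed(deliverable))
    return earned / total

# Hypothetical rubric for a construction cost estimate.
RUBRIC = [
    Check("line items sum to total", 5,
          lambda d: abs(sum(d["line_items"].values()) - d["total"]) < 0.01),
    Check("includes contingency", 3,
          lambda d: "contingency" in d["line_items"]),
    Check("cites drawing revision", 2,
          lambda d: bool(d.get("drawing_revision"))),
]

if __name__ == "__main__":
    estimate = {
        "line_items": {"concrete": 12000.0, "labor": 8000.0},
        "total": 20000.0,
        "drawing_revision": "B",
    }
    print(grade(estimate, RUBRIC))
```

In this framing, the experts supply the checks and weights, the environment supplies the task and data, and the score is what an RL pipeline would consume as its reward.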

Every party (end customers, foundation labs, and vertical platforms) should want this to happen.

After all, for the labs this means better models on the path to AGI; for vertical companies it means increased revenue and customer capture from more capable models delivering work inside their platform; and for customers it means productivity gains.

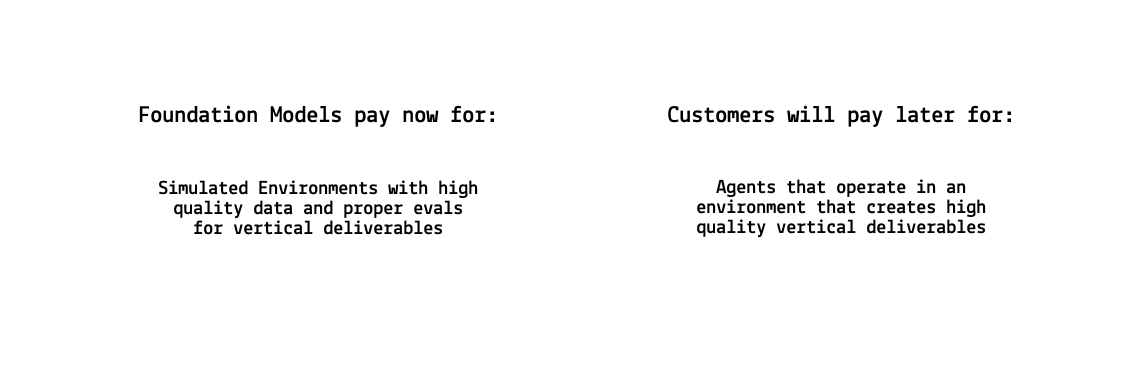

So vertical companies sit between two groups that are both, ironically, willing to pay for increased model capability: the labs and the vertical companies’ own customers.

What’s the opportunity here?

This piece is already getting too long for a Wednesday. But is there an opportunity here for vertical companies to monetize the creation of RL environments for their industry, and then sell the resulting capability to their customers almost immediately once the models improve?

I’d imagine something like the following scenario:

Foundation model companies need RL data.

Vertical companies have a great view into industry deliverables and the simulated environments required to evaluate and train agent capability on them.

Likewise, vertical companies have distribution into customers that have expertise in evaluating deliverable and data quality.[6]

The labs are essentially willing to subsidize this for the foreseeable future as the race for the model to rule them all continues. This largely functions as covered R&D expense for vertical companies. I want to highlight just how unusual this is: the labs are willing to pay vertical experts to build models that vertical software companies will likely end up bringing to market as fully packaged products for an industry, with revenue payoffs easily eclipsing tens of millions in ARR.[7]

The sheer number of deliverables where we likely need verifiable rewards and training data is insane. If we were to just take the construction sector, we are immediately looking at dozens if not hundreds of potential model capabilities that need to be developed. Mercor’s sentiment is not entirely wrong: The economy will become an RL Environment Machine.

Once the model is newly capable, the environment can be repackaged from a simulation into a product in the vertical. This can easily result in a large revenue uplift. And you can likewise imagine the labs giving the vertical companies that supply the best RL environments the first look at new model capabilities.

There are various challenges here, including time vs. effort, partnership negotiations, environment creation,[8] legal and compliance hurdles, and more.

But I think there’s something here. And I even wonder if the challenges that come to mind are solvable via a company that essentially operates as an embedded environment creator and middleman between the labs and vertical companies.

The opportunity for that company seems quite large, and there are some really interesting strategies to be run if the market for something like this is there. And because everyone right now is incentivized towards enhanced model capabilities with large revenue payoffs for all involved, I’m eager to explore this more. If this piques your interest even in this abstract piece, I’d love to hear from you.

Everyone wants models to get better. Everyone needs increased capabilities in their specific sector, the labs want this too and are willing to pay to make it happen, and vertical companies likely become the biggest beneficiaries: if models advance, their moats get stronger as long as they have proprietary access, which may partly stem from being the advocates and expediters for model progress by creating the next thousand RL environments on the path to AGI.

[1] I make no distinction here between vertical AI and vertical SaaS companies. Both are likely to attempt the same goal from different directions: become a unified system of record and action.

[2] I.e., databases still matter. You could likewise add some subpremises here, such as "AI doesn’t result in lower maintenance costs for self-rolled systems," etc.

[3] I.e., ChatGPT as a form factor for accomplishing work is a worse experience than doing similar model-based work in a native environment built for your industry.

[4] Of course, here we are talking about deliverables inside the workflows that vertical SaaS companies track: the paperwork, analyses, projects, customer relationships, and more.

[5] Absent a completely novel approach that solves continual learning.

[6] I’d imagine some sort of “Labs” entity that vertical companies spin up to run Mercor-type labeling strategies in their vertical.

[7] The more capabilities, the more pricing power too.

[8] Do many vertical companies have the ML talent to do this?
